ruby-changes:62150

From: Aaron <ko1@a...>

Date: Tue, 7 Jul 2020 06:18:25 +0900 (JST)

Subject: [ruby-changes:62150] b06a4dc6f1 (master): Expand heap pages to be exactly 16kb

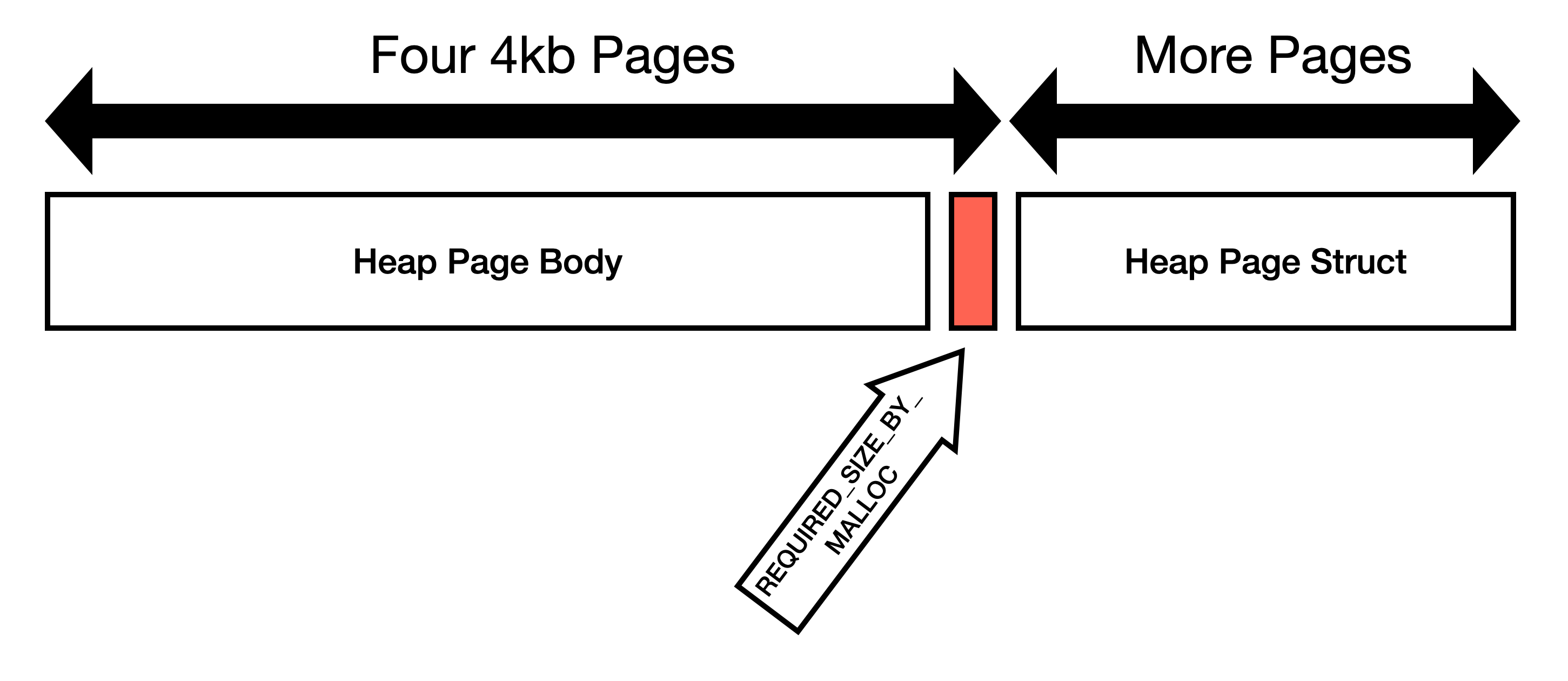

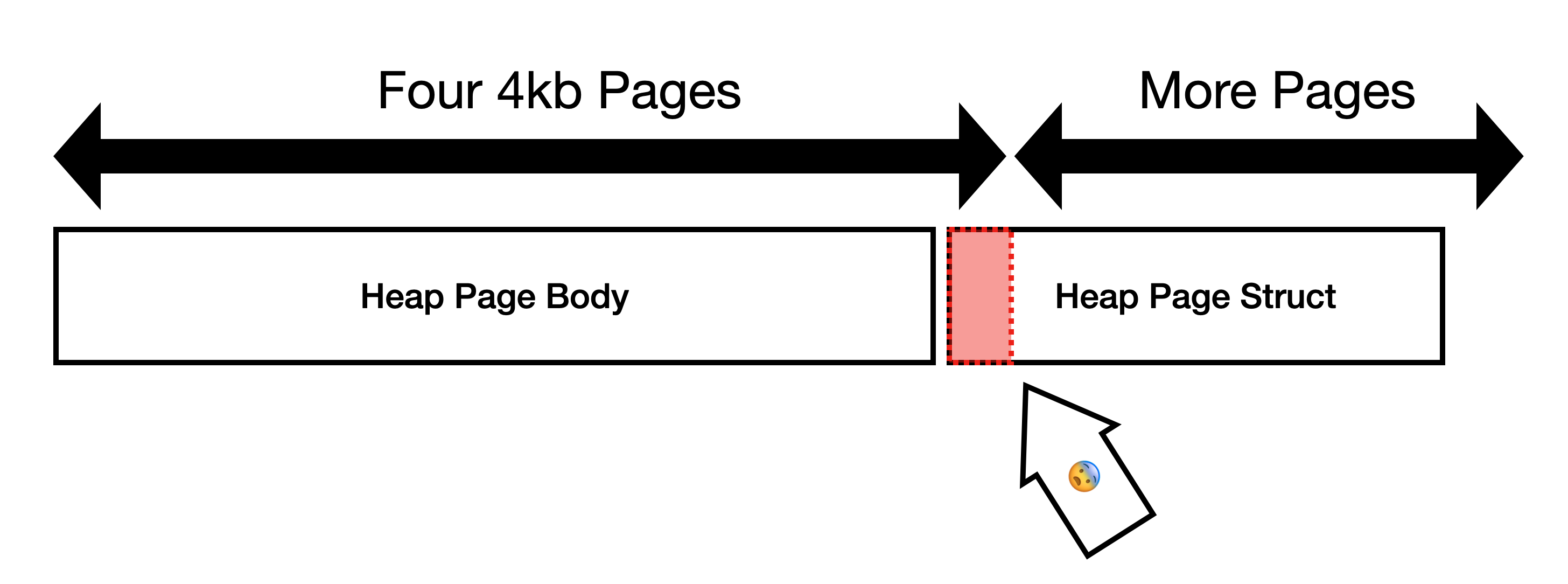

https://git.ruby-lang.org/ruby.git/commit/?id=b06a4dc6f1

From b06a4dc6f1fbef12ff7b4d57e7b5f12fd1f6cb5b Mon Sep 17 00:00:00 2001
From: Aaron Patterson <tenderlove@r...>
Date: Thu, 25 Jun 2020 15:20:25 -0700
Subject: Expand heap pages to be exactly 16kb

This commit expands heap pages to be exactly 16KiB and eliminates the `REQUIRED_SIZE_BY_MALLOC` constant.

I believe the goal of `REQUIRED_SIZE_BY_MALLOC` was to make the heap pages consume some multiple of OS page size. 16KiB is convenient because OS page size is typically 4KiB, so one Ruby page is four OS pages.

Do not guess how malloc works
=============================

We should not try to guess how `malloc` works and instead request (and use) four OS pages.

Here is my reasoning:

1. Not all mallocs will store metadata in the same region as user requested memory. jemalloc specifically states[1]:

   > Information about the states of the runs is stored as a page map at the beginning of each chunk.

2. We're using `posix_memalign` to request memory. This means that the first address must be divisible by the alignment. Our allocation is page aligned, so if malloc is storing metadata *before* the page, then we've already crossed page boundaries.

3. Some allocators like glibc will use the memory at the end of the page. I am able to demonstrate that glibc will return pointers within the page boundary that contains `heap_page_body`[2].

We *expected* the allocation to look like this:

But since `heap_page` is allocated immediately after `heap_page_body`[3], instead the layout looks like this:

This is not optimal because `heap_page` gets allocated immediately after `heap_page_body`. We frequently write to `heap_page`, so the bottom OS page of `heap_page_body` is very likely to be copied.

One more object per page
========================

In jemalloc, allocation requests are rounded to the nearest boundary, which in this case is 16KiB[4], so `REQUIRED_SIZE_BY_MALLOC` space is just wasted on jemalloc. On glibc, the space is not wasted, but instead it is very likely to cause page faults.

Instead of wasting space or causing page faults, let's just use the space to store one more Ruby object. Using the space to store one more Ruby object will prevent page faults, stop wasting space, decrease memory usage, decrease GC time, etc.

1. https://people.freebsd.org/~jasone/jemalloc/bsdcan2006/jemalloc.pdf
2. https://github.com/ruby/ruby/commit/33390d15e7a6f803823efcb41205167c8b126fbb
3. https://github.com/ruby/ruby/blob/289a28e68f30e879760fd000833b512d506a0805/gc.c#L1757-L1763
4. https://people.freebsd.org/~jasone/jemalloc/bsdcan2006/jemalloc.pdf page 4

Co-authored-by: John Hawthorn <john@h...>

diff --git a/gc.c b/gc.c
index a2a7997..d1af8ba 100644
--- a/gc.c
+++ b/gc.c
@@ -805,8 +805,7 @@ typedef struct rb_objspace { https://github.com/ruby/ruby/blob/trunk/gc.c#L805
 enum {
     HEAP_PAGE_ALIGN = (1UL << HEAP_PAGE_ALIGN_LOG),
     HEAP_PAGE_ALIGN_MASK = (~(~0UL << HEAP_PAGE_ALIGN_LOG)),
-    REQUIRED_SIZE_BY_MALLOC = (sizeof(size_t) * 5),
-    HEAP_PAGE_SIZE = (HEAP_PAGE_ALIGN - REQUIRED_SIZE_BY_MALLOC),
+    HEAP_PAGE_SIZE = HEAP_PAGE_ALIGN,
     HEAP_PAGE_OBJ_LIMIT = (unsigned int)((HEAP_PAGE_SIZE - sizeof(struct heap_page_header))/sizeof(struct RVALUE)),
     HEAP_PAGE_BITMAP_LIMIT = CEILDIV(CEILDIV(HEAP_PAGE_SIZE, sizeof(struct RVALUE)), BITS_BITLENGTH),
     HEAP_PAGE_BITMAP_SIZE = (BITS_SIZE * HEAP_PAGE_BITMAP_LIMIT),
@@ -4187,20 +4186,20 @@ gc_page_sweep(rb_objspace_t *objspace, rb_heap_t *heap, struct heap_page *sweep_ https://github.com/ruby/ruby/blob/trunk/gc.c#L4186
 {
     int i;
     int empty_slots = 0, freed_slots = 0, final_slots = 0;
-    RVALUE *p, *pend,*offset;
+    RVALUE *p, *offset;
     bits_t *bits, bitset;

     gc_report(2, objspace, "page_sweep: start.\n");

     sweep_page->flags.before_sweep = FALSE;

-    p = sweep_page->start; pend = p + sweep_page->total_slots;
+    p = sweep_page->start;
     offset = p - NUM_IN_PAGE(p);
     bits = sweep_page->mark_bits;

     /* create guard : fill 1 out-of-range */
     bits[BITMAP_INDEX(p)] |= BITMAP_BIT(p)-1;
-    bits[BITMAP_INDEX(pend)] |= ~(BITMAP_BIT(pend) - 1);
+    bits[BITMAP_INDEX(p) + sweep_page->total_slots / BITS_BITLENGTH] |= ~((1 << ((NUM_IN_PAGE(p) + sweep_page->total_slots) % BITS_BITLENGTH)) - 1);

     for (i=0; i < HEAP_PAGE_BITMAP_LIMIT; i++) {
         bitset = ~bits[i];

--
cgit v0.10.2

--
ML: ruby-changes@q...
Info: http://www.atdot.net/~ko1/quickml/